I was a young boy when elevator operators still closed those see-through, metal accordion interior elevator doors by hand and then moved the elevator up or down by rotating a knob on a wheel embedded in the elevator wall.

Within a few years all those operators were gone, replaced by numbered buttons on the elevator wall. Today, so many activities that used to be mediated by human judgement are now governed by algorithms inside automated systems.

Apart from the implications for elevator operators and others displaced by such technology, there is the question of transparency. It’s easy to determine visually whether an elevator door is open and the elevator is level with the floor you’re on so that you can safely exit.

As the world has seen to its horror twice recently, it’s harder to know whether software on a Boeing 737 MAX is giving you the right information and doing the right thing while you are in mid-flight.

Yet more and more of our lives are being turned over to software. And despite the toll in lives, stolen identities, computer breaches and cybercrime, and even the threat that organized military cyberwar units now pose to critical water and electricity infrastructure, the public, industry and government remain in thrall to the idea that we should turn over more and more control of our lives to software.

Of course, software can do some things much better and faster than humans: vast computations and complex modeling; control of large-scale processes such as oil refining and air transportation routes; precision manufacturing by robots; and many, many other tasks. We use software to tell machines to do repetitive and mundane tasks (utilizing their enormous capacity and speed) and to give us insight into highly complex phenomena such as climate change.

As long as we were using software to control discrete processes, its failures could be localized. But in an increasingly connected world, those failures can cascade across entire financial, business, government and other networks, bringing parts of our lives to a halt. The advent of the so-called Internet of Things threatens to bring such cascades right into our homes.

Two manifestly unreliable assumptions continue to stalk us when it comes to our enthrallment to software. First is the benign assumption, the assumption that new technologies will only be used with good intentions to promote the well-being of individuals and the societies they live in. Even though most people when presented with this assumption will acknowledge that it is not true, they continue to embrace new technologies as if it were true. Witness the largely uncritical uptake of the Internet of Things, a dangerous tsunami of disaster waiting to happen if there ever was one.

The second assumption is that all human activities can be reduced to a set of instructions. There are lots of issues here, but I’ll touch on just two. First, instructions are formed using words, and words by their very nature are ambiguous. They have multiple meanings in various contexts. Each person interprets the meaning of words based on his or her life experience and perception of the context. Thus, even when two people are using the same word in what appears to be the same context, they may be communicating slightly or even wildly different meanings.

My favorite illustration of this difficulty is two people using the word “God.” The fact that some people might spell the word with a lowercase “g” and some might add an “s” on the end just scratches the surface when trying to understand what people mean by this word.

Second, the idea that the boundaries of a particular activity can be clearly defined is questionable. If I am describing the baking of a cake, do I include all the activities of making up a list for shopping, buying the ingredients and then bringing them home? Do I include calling my aunt to ask her whether she does something special to make her cake taste so good?

It’s not easy to define what an “activity” is. The permutations may be few or proliferate into the thousands or millions.

The unbounded faith that software can tackle just about anything humans can finds its origins in a 1958 essay by mathematician John von Neumann entitled “The Computer and the Brain.” Rather than being seen as an interesting but highly imperfect analogy, the essay has passed into the realm of fact governing the minds of those who imagine a world ruled by software.

Even neuroscientists have been bewitched by this analogy, assuming that what they call intelligence is located in the brain rather than being a distributed property that depends on the whole body and field of action of a person. These scientists are working from a story about how the mind works and where it is located and fitting their “data” to that story.

Stories are useful guides. Humans live by narratives. Narratives give meaning and structure to our experience. But narratives are not “the truth” or “reality.” They are but one version, usually a very incomplete version. And, any reality that we attempt to describe on one day has already shifted by the next.

When I think back to those elevator operators in their white gloves and well-pressed uniforms, I grow a little nostalgic for the time when people were running the elevators I was riding in. Operators who have their hands directly on the controls tend to inspire the confidence that a real-time flexible response is possible if something unexpected happens on the way to the 40th floor.

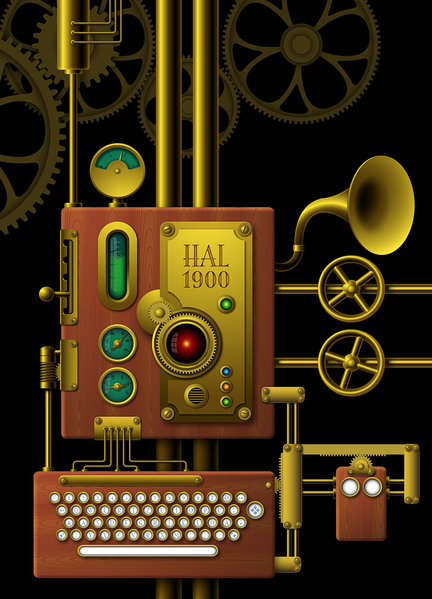

Image: Illustration of a fictional Steampunk computer (2013). A tribute to the computer HAL 9000 from Stanley Kubrick’s film “2001: A Space Odyssey.” Grafiker61. Via Wikimedia Commons https://commons.wikimedia.org/wiki/File:Steampunk-HAL.png