The death of a pedestrian during a test drive of a driverless vehicle (even as a backup human sat in the driver’s seat) calls into question not just the technology—which didn’t seem to detect the pedestrian crossing a busy roadway and therefore didn’t brake or swerve—but also the notion that driving is nothing more than a set of instructions that can be carried out by a machine.

The backup driver, who appeared surprised, seemed to trust the inventors of driverless cars: he was briefly looking down at his computer just before impact.

Certainly, a human driver might also have hit this pedestrian, who was crossing a busy street at night with her bicycle. But, of course, as a friend of mine pointed out, there is a big difference in the public mind between a human driver hitting and killing a pedestrian and a robot killing one. If the incident had involved a human driver in a regular car, it would probably have been reported only locally.

But the real story is “robot kills human.” Even worse, it happened as a seemingly helpless human backup driver looked on. The optics are the absolute worst imaginable for the driverless car industry.

It makes sense to me that a world of exclusively driverless cars with a limited but known repertoire of moves might indeed be safer than our current world of human drivers. But trying to anticipate every permutation of human behavior in the control systems of driverless cars seems like a fool’s errand. I’m skeptical that the broad public will readily accept a mixed system of human and robot drivers on the roads. You can be courteous or rude to other drivers on the road or to pedestrians on the curb. But how can you make your intentions known to a robot? How could a pedestrian communicate with a robot car as simply as with a nod or a wave acknowledging a driver’s courteous offer to let the pedestrian cross the street?

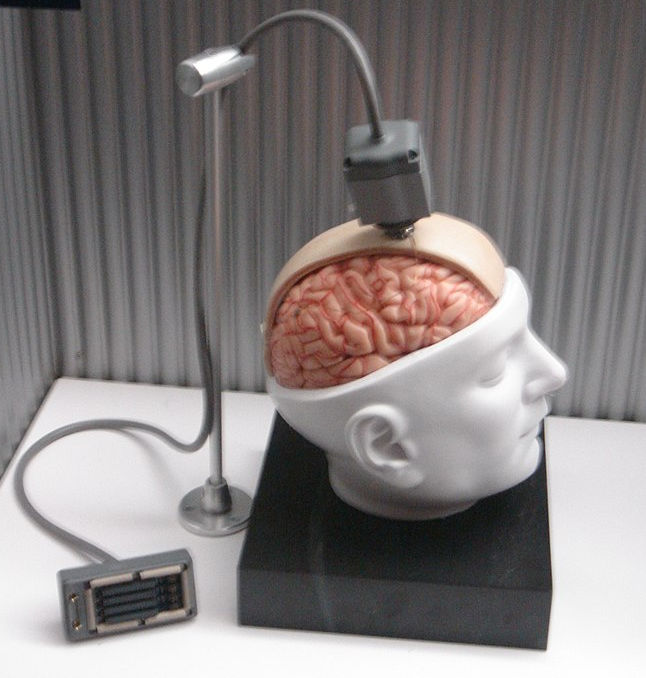

The idea that we can capture the complexities of human cognition, decision-making and even personality well enough to mimic them finds gruesome company in another idea that made the news recently: a startup firm that offers to preserve your brain in a chemical solution in the hope that the brain’s content can be uploaded into some future advanced technological matrix where you can live again.

The catch is that the brain must be harvested before death. In other words, you must consent to be euthanized.

Even if one gets past this fatal fact, there is the problem of semantics. The founders say on their company website that they are “[c]ommitted to the goal of archiving your mind.” The brain is a specific, identifiable organ in the body that has an agreed-upon location and morphology. The mind is a much vaguer concept. We say the mind is the part of us that thinks, but also, according to the dictionary meaning, the part that feels, judges, reasons, wills, or even just perceives.

It is hard to imagine the mind doing all these things without the body or, for that matter, the world around us. Mind posits vast ongoing connections of which the brain is only one aspect. Instead of conceiving of the brain as a command center, we might also think of it as a switchboard. Can we expect to get all the properties of a network by merely preserving the switchboard?

Beyond this, we forget that other cultures have located what we call mind within the heart. Even today, the link between consciousness and the heart warrants study. And there is considerable evidence for a brain of sorts in the human gut, one that profoundly influences our moods. So much for our feeling lives being merely in our brains. Once again, it is hard to imagine the life of feelings divorced from our bodies. We say we feel joy in our hearts or a tingling in our spines or butterflies in our stomachs. It turns out we do!

Just as we use the metaphor of the body to discuss the structure of organizations (we say so-and-so is the head of the company), we must recognize that the head as the seat of command for an individual is merely a metaphor. A captain barks orders from the mouth in his head, so it seems that his head is in charge. But the orders themselves require coordination of the lungs and the vocal cords, and ultimately they draw on information coming in through all the senses from the environment. Moreover, identical orders take on different meanings depending on the body language and tone of voice of the captain. He or she might be telling a joke in the form of a ridiculously inappropriate order, something one cannot understand from the words alone.

The idea that we might duplicate ourselves on the machine model has fascinated humans at least since Mary Shelley wrote her famous novel *Frankenstein*. The machine, of course, is just another metaphor for conceiving how humans work, and it is far less sophisticated than the models offered by biology and ecology.

The most sophisticated attempts today to mimic human cognition and action go by the name artificial intelligence (AI). It is important to emphasize the word “artificial” in this description. No doubt AI can automate many functions in society that were not amenable to our earlier, cruder methods. But it is doubtful that AI will ever equal human intelligence and action. To reproduce human intelligence and action, we would need to reproduce the body and all its interactions with the social and physical environment. Even if we were intelligent enough to perform this monumental feat, there is no way for us to get outside the system in which we live to observe all the aspects we want to reproduce. And, of course, our actions and those of countless other beings, plants, and physical processes keep altering the system we seek to reproduce.

The belief that machines will someday be “smart like humans” is based on one imperfect metaphor on top of another: brain as a command center in a body modeled as an electrochemical machine. Machines are already “smarter” than humans in some ways. They can calculate answers to vastly complex equations that model weather and climate, for example. They can manage and monitor complex systems such as oil refinery operations or bank transactions with unparalleled speed and accuracy.

But human cognition is not a thing. It cannot be reproduced without reproducing the entire system within which it operates. Human cognition emerges out of the system we live within rather than merely being embedded in it. Cognition is a process rather than a result, and so is the whole host of other activities we attribute to humans: feeling, judging, willing, and perceiving.

To reiterate, we humans cannot get outside the system in which we live. We are forever participant/observers, doomed to know the universe in which we live in only a partial manner dictated by our limited senses and the extensions we’ve been able to design for them.

We are indeed the human/tool hybrid described by William Catton as *Homo colossus* in his pathbreaking book *Overshoot*. But we are still human, and being human, as it turns out, is a complex construction involving us and everything around us, something that no amount of AI or other computer intelligence will ever be able to describe or reproduce completely.

Photo: Dummy BrainGate interface at the Star Wars exhibition at the Boston Science Museum in October 2005. Photo by Paul Wicks. Via Wikimedia Commons.